Data and measurement

Most marketers want to answer one key question: Did my ads actually cause new-customer behavior, or would the people who saw my ads have converted anyway?

Even the most advanced attribution models don’t resolve this question. Sure, they can help you understand how many of the people you reached went on to convert. This can lead to helpful insights about your audience, including where to find them and how they interact with your brand. But at the end of the day, many marketers use last-touch attribution, which only shows a correlation between an ad exposure and a conversion; it doesn’t measure causation or incremental lift. In other words, it doesn’t tell you if seeing your ad actually caused a person to convert.

This is where Conversion Lift comes in. By using Conversion Lift experiments to measure campaigns on The Trade Desk, you can understand how your ads are driving incremental results. You can answer questions like:

Conversion Lift uses test and control groups to compare the behavior of people who were exposed to your ads to other people from your target audience who were not exposed to your ads.

We’re essentially running an experiment to see if the variable of serving an ad to someone causes them to take a specific action — in this case, engaging with your brand in some way, whether that’s going to your website, visiting a store, or buying a product.

With some advertising platforms, lift experiments require a certain level of investment in public service announcement (PSA) ads. This is not the case with our solution. We use a process sometimes known as ghost bidding. When a user becomes eligible for a bid from a client’s campaign, they are randomly assigned to either a test or a control group. If the user is assigned to the test group, we place a bid to show them the ad; otherwise, we record that we would have bid and place them in the control group (also known as the holdout). This means you don’t have to set aside any of your media budget for PSA ads, and there’s no additional cost to run Conversion Lift.
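The assignment step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not The Trade Desk’s implementation: the function names, the hash-based split, the 50/50 `test_share`, and the `device_to_person` mapping (a stand-in for a cross-device graph) are all hypothetical.

```python
import hashlib


def assign_group(person_id: str, experiment_id: str, test_share: float = 0.5) -> str:
    """Map a person to 'test' or 'control' (hypothetical hash-based split).

    Hashing the experiment and person IDs gives a pseudo-random but stable
    assignment, so the same person lands in the same group on every
    bid opportunity.
    """
    digest = hashlib.sha256(f"{experiment_id}:{person_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and scale it to [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "test" if bucket < test_share else "control"


def handle_bid_opportunity(device_id, device_to_person, experiment_id, ghost_log):
    """Decide whether to bid, or to record a ghost bid for the holdout."""
    # Resolve the device to a person so that all of a person's devices
    # share one group (assumed stand-in for a cross-device graph).
    person_id = device_to_person.get(device_id, device_id)
    if assign_group(person_id, experiment_id) == "test":
        return "bid"
    # Control group: no bid is placed, but the opportunity is logged.
    ghost_log.append(person_id)
    return "no_bid"
```

Because the holdout is built from logged opportunities rather than served impressions, no media spend goes to placeholder ads in this sketch, which mirrors the point about PSA budgets above.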

1. First, you need to define the conversion event you want to measure for the campaign. Ideally, the tracking tags should be set up and collecting data for at least two weeks, and preferably a full month, before the experiment begins.

2. You work with your rep at The Trade Desk to set up the campaign, define audience targeting, and adjust the relevant cross-device settings.

3. Our platform then creates randomized, statistically sound test and control groups based on your target audience, using our cross-device graph to ensure that all devices for a single user are assigned to the same group. We bid on the test group only; the control group comprises a similar set of users we would have bid on but did not.

4. We track conversions and compare conversion behavior between the test and control groups (which, thanks to random assignment, should differ only in ad exposure) to analyze, understand, and report on the incremental lift in conversion events driven by ad exposure.

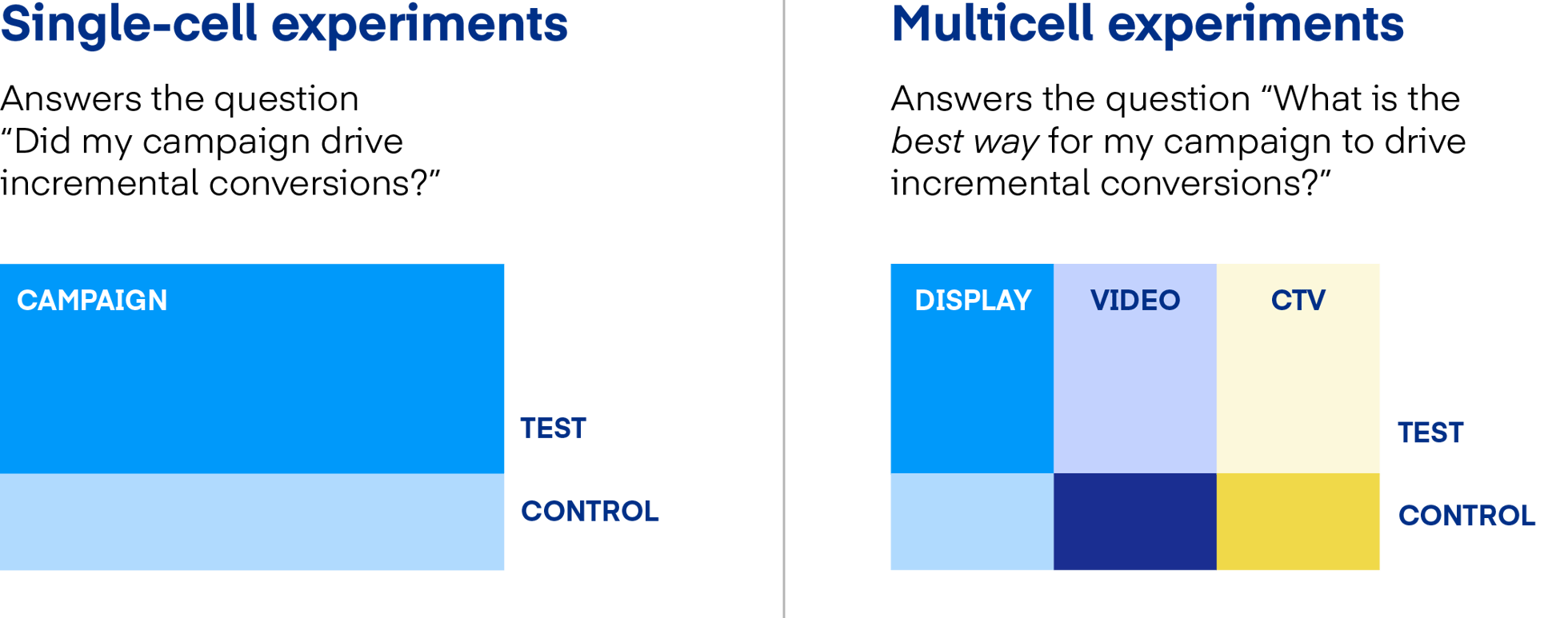
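To make the comparison in step 4 concrete, here is a minimal sketch of how lift could be computed from group totals. It is not the platform’s reporting logic: the function name, the returned fields, and the choice of a two-proportion z-test for significance are assumptions made for illustration.

```python
import math


def conversion_lift(test_users, test_conversions, control_users, control_conversions):
    """Compare conversion rates between test and control groups (illustrative)."""
    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users

    # Absolute lift: difference in conversion rates between the groups.
    absolute_lift = test_rate - control_rate
    # Relative lift: that difference as a share of the control (baseline) rate.
    relative_lift = absolute_lift / control_rate if control_rate > 0 else float("nan")
    # Conversions in the test group beyond what the baseline rate predicts.
    incremental_conversions = absolute_lift * test_users

    # Two-proportion z-test with a pooled rate (assumed significance check).
    pooled = (test_conversions + control_conversions) / (test_users + control_users)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / test_users + 1 / control_users))
    z = absolute_lift / std_err if std_err > 0 else 0.0
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value

    return {
        "test_rate": test_rate,
        "control_rate": control_rate,
        "absolute_lift": absolute_lift,
        "relative_lift": relative_lift,
        "incremental_conversions": incremental_conversions,
        "p_value": p_value,
    }


# Made-up numbers, for illustration only.
result = conversion_lift(
    test_users=100_000, test_conversions=1_200,
    control_users=100_000, control_conversions=1_000,
)
```

With these invented inputs, the test group converts at 1.2% against a 1.0% baseline, which is a 20% relative lift and about 200 incremental conversions. The p-value indicates how likely a gap of that size would be if the ads had no effect.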

The standard Conversion Lift experiment is called single cell, and it helps you understand whether your campaign spend is driving incremental conversions.

But things really start getting interesting when you consider multicell experiments. These give you the ability to conduct true hypothesis testing in our platform, with experiments that aim to answer the question “What is the best way for my campaign to drive incremental conversions?”

With multicell experiments, you can test a variety of variables:

While Conversion Lift may sound like the perfect solution, it does not make sense for every advertiser or campaign. For example, if you’re running a mass-reach campaign outside of The Trade Desk, say on linear TV, then the control group will likely be contaminated by ad exposure from media bought outside The Trade Desk. In this case, Conversion Lift may not be the right fit.

Once you’ve determined that it is the right fit, there are some nuances to setting up experiments and interpreting results. Here are some best practices that we recommend:

Whether you’re running single- or multicell experiments, we always recommend implementing a learning agenda by thinking through the hypotheses you want to test with Conversion Lift. Testing different variables can help you understand how your media investment is driving incremental conversions so you can try to achieve more efficient performance and make better investment decisions.

To get started, reach out to your account rep at The Trade Desk today.
